2022 02Feb 05

The official Nginx docs for caching came in handy: https://www.nginx.com/blog/nginx-caching-guide/

Vegeta is a load-testing tool I found on GitHub.

Vegeta is written in Go, which is one of my favorite languages to not write.

You can sort languages based on "How much of the language must I learn before using a typical FOSS app written in it?"

I've always had good luck running Go binaries as-is, even on Windows. With Python and Ruby apps, I've always had bad luck, even on Linux. I either get by with a Docker container (Synapse) or I give up and replace the app with something else. (I'll never forgive you, GitLab. Gitea is so much easier to run!)

Anyway, Vegeta is great, but there isn't a quickstart example in its docs. This mild annoyance is common in FOSS projects: they document every single setting, but they don't think to write down the examples I need as a starting point.

I came up with this targets.txt:

```
GET https://six-five-six-four.com/ptth/servers/aliens_wildland/files/blog/index.html
GET https://six-five-six-four.com/ptth/servers/aliens_wildland/files/blog/media/2022-01Jan/violife-provolone.jpeg
GET https://six-five-six-four.com/ptth/servers/aliens_wildland/files/blog/media/ISS_12-06-20-768x432.jpg
```

This will fetch the index of the blog and a couple of images.

Then I wrote this attack script:

```sh
./vegeta attack \
  -output output.bin \
  -rate 100 \
  -duration 30s \
  -max-body 0B \
  < targets.txt
```

This sends 100 requests per second, spread across all the targets, for 30 seconds (3,000 requests in total), discarding the response bodies and writing the results to output.bin.
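A back-of-the-envelope model of what `-rate 100 -duration 30s` schedules, sketched in Python. It assumes that requests are evenly spaced and that Vegeta cycles through the targets round-robin; that is an assumption for illustration, not something taken from Vegeta's docs. The short names are placeholders for the three URLs in targets.txt:

```python
from collections import Counter


def schedule(targets, rate_per_s, duration_s):
    """(send_time_s, target) for every request in a constant-rate attack.

    Assumption: requests are evenly spaced and targets are used round-robin.
    """
    total = rate_per_s * duration_s          # 100 req/s * 30 s = 3000 requests
    return [(i / rate_per_s, targets[i % len(targets)]) for i in range(total)]


# Placeholder names standing in for the three URLs in targets.txt.
targets = ["index.html", "violife-provolone.jpeg", "ISS_12-06-20-768x432.jpg"]
plan = schedule(targets, rate_per_s=100, duration_s=30)

print(len(plan))                          # 3000
print(Counter(t for _, t in plan))        # 1000 requests per target
print(plan[-1][0])                        # 29.99 (last send time, seconds)
```

Because `-rate` counts requests across all targets, that's 3,000 requests, roughly 1,000 per URL under the round-robin assumption, and the last one goes out at 29.99 s, which lines up with the `attack` duration in the report below.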

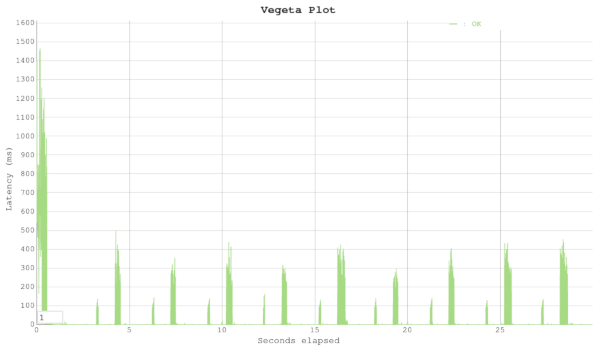

Then I ran ./vegeta plot output.bin > output.html to render the results

into a nice HTML + SVG + JS plot:

You can see that the latencies spike to about 400 ms every 3 seconds when the max-age expires and Nginx has to hit PTTH to refresh the cache.

Otherwise, the server is just making requests to itself without even leaving the datacenter, so each request takes almost no time at all.
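To see why a blocking refresh shows up as periodic spikes, here is a toy model in Python. Every number in it is an assumption for illustration, not a measurement and not Nginx's actual algorithm: one target, a request every 10 ms, a 3 s max-age counted from when a refresh starts, a 400 ms round trip to PTTH, 1 ms cache hits, and every request that arrives mid-refresh waiting for that refresh to finish.

```python
def blocking_refresh_latencies_ms(rate_per_s=100, duration_s=30,
                                  max_age_ms=3000, fetch_ms=400, hit_ms=1):
    """Latency (ms) of each request when an expired entry blocks on the origin."""
    step_ms = 1000 // rate_per_s             # 10 ms between requests at 100/s
    latencies = []
    fresh_until = 0                          # cache starts cold
    refresh_done = None                      # when the in-flight fetch finishes
    for now in range(0, duration_s * 1000, step_ms):
        if refresh_done is not None and now >= refresh_done:
            refresh_done = None              # the new copy is in the cache
        if refresh_done is None and now >= fresh_until:
            refresh_done = now + fetch_ms    # expired: go back to the origin
            fresh_until = now + max_age_ms   # assumption: age counts from here
        if refresh_done is not None:
            latencies.append(refresh_done - now)  # blocked until it finishes
        else:
            latencies.append(hit_ms)              # fast cache hit
    return latencies


lat = blocking_refresh_latencies_ms()
# Send times (ms) of the requests that waited the full 400 ms; 10 ms per request.
spikes = [i * 10 for i, ms in enumerate(lat) if ms == 400]
print(len(lat), max(lat))   # 3000 400
print(spikes)               # [0, 3000, 6000, 9000, 12000, 15000, 18000, 21000, 24000, 27000]
```

Each spike is the request that triggers the refresh, followed by a short ramp of requests that arrived during the 400 ms and waited a little less; everything else is a cache hit.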

`./vegeta report < output.bin` prints this nice text-only report, showing that the cache hits are fast enough to keep the 90th percentile at 4.1 milliseconds:

```
Requests      [total, rate, throughput]         3000, 100.03, 100.03
Duration      [total, attack, wait]             29.991s, 29.99s, 1.157ms
Latencies     [min, mean, 50, 90, 95, 99, max]  275.52µs, 26.629ms, 768.427µs, 4.115ms, 187.446ms, 589.279ms, 1.468s
Bytes In      [total, mean]                     0, 0.00
Bytes Out     [total, mean]                     0, 0.00
Success       [ratio]                           100.00%
Status Codes  [code:count]                      200:3000
Error Set:
```
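The report has the typical shape of a cache with occasional slow refreshes: p50 and p90 stay under 5 ms, while the mean (26.6 ms) and p95 (187 ms) are pulled up by the few requests that waited on PTTH. A small Python illustration with made-up numbers (not the data from this test), using a simple nearest-rank percentile; Vegeta may compute its quantiles differently:

```python
import math
import statistics


def percentile(sorted_values, p):
    """Nearest-rank percentile: the smallest sample with at least p% of all
    samples at or below it. (Not Vegeta's code; its estimator may differ.)"""
    rank = math.ceil(p * len(sorted_values) / 100)
    return sorted_values[max(rank, 1) - 1]


# Hypothetical latencies in ms: 92 cache hits and 8 requests stuck on a refresh.
latencies = sorted([1.0] * 92 + [300.0] * 8)

print(statistics.mean(latencies))           # 24.92
for p in (50, 90, 95, 99):
    print(p, percentile(latencies, p))      # 1.0, 1.0, 300.0, 300.0
```

With 8% of requests slow, p90 still lands on a cache hit while p95 lands on a refresh, and the mean ends up far above the median.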

Great for a blog that is, in spirit, hosted off of my crappy home ISP. I'm just acting as my own 1-node CDN, same as Cloudflare does. Buy me out, CF-senpai!

But as I looked at it, I thought, "I could do better".

The Nginx tutorial I had open already showed how: Allow Nginx to return stale data while updating its cache in the background.

This has the very nice upside that during a slashdotting, no request blocks on the slow origin server. Nginx serves them stale responses while it refreshes from the origin server in the background.

It has the downside for me, as the author of the blog, that I have to refresh everything twice while editing: the first Firefox refresh gives me stale cached data and kicks off a request to the origin server, and the second refresh (probably) gets the edited data that's just been pulled into Nginx's cache.
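The stale-while-revalidate behavior, including the "refresh twice" side effect, can be modeled with a small in-memory cache. This is a hypothetical Python sketch of the idea Nginx provides through `proxy_cache_use_stale updating` together with `proxy_cache_background_update on`, not Nginx's implementation; the class name, the `wait_for_refreshes` test helper and the fake clock are made up for the example.

```python
import threading
import time


class StaleWhileRevalidateCache:
    """Toy cache: once an entry is older than max_age_s it keeps serving the
    old copy and refreshes it from the origin on a background thread."""

    def __init__(self, fetch, max_age_s, clock=time.monotonic):
        self._fetch = fetch          # callable(key) -> value; the slow origin
        self._max_age = max_age_s
        self._clock = clock
        self._lock = threading.Lock()
        self._entries = {}           # key -> (value, fetched_at)
        self._updating = {}          # key -> in-flight refresh thread

    def get(self, key):
        with self._lock:
            entry = self._entries.get(key)
        if entry is None:
            # Cold cache: nothing stale to serve, so this caller has to wait.
            value = self._fetch(key)
            with self._lock:
                self._entries[key] = (value, self._clock())
            return value
        value, fetched_at = entry
        if self._clock() - fetched_at >= self._max_age:
            self._start_refresh(key)     # fire and forget
        return value                     # possibly stale, no wait on the origin

    def _start_refresh(self, key):
        with self._lock:
            if key in self._updating:
                return                   # at most one refresh per key
            thread = threading.Thread(target=self._refresh, args=(key,))
            self._updating[key] = thread
            thread.start()

    def _refresh(self, key):
        try:
            value = self._fetch(key)
            with self._lock:
                self._entries[key] = (value, self._clock())
        finally:
            with self._lock:
                del self._updating[key]

    def wait_for_refreshes(self):
        """Test helper: block until in-flight background refreshes finish."""
        with self._lock:
            threads = list(self._updating.values())
        for thread in threads:
            thread.join()


# Usage: edit a page behind the cache, with a fake clock for repeatability.
origin = {"index.html": "old post"}
now = [0.0]
cache = StaleWhileRevalidateCache(lambda k: origin[k], max_age_s=3.0,
                                  clock=lambda: now[0])

print(cache.get("index.html"))   # old post     (cold miss, fetched inline)
origin["index.html"] = "edited post"
now[0] = 5.0                     # the cached copy is now past its max-age
print(cache.get("index.html"))   # old post     (stale; refresh starts)
cache.wait_for_refreshes()
print(cache.get("index.html"))   # edited post
```

The second `get` returns the old page and only kicks off the refresh; the edit shows up on the next call, which is the double refresh described above.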

Blogs are "Write-few, read-many" loads, so this is perfect for a blog. PTTH can also be used for "Write-once, read-few" loads, like sharing art assets within a game jam team. But this caching logic lives entirely in Nginx, so I made the right decision not to implement any caching in the PTTH relay: it doesn't know anything that Nginx doesn't also know.

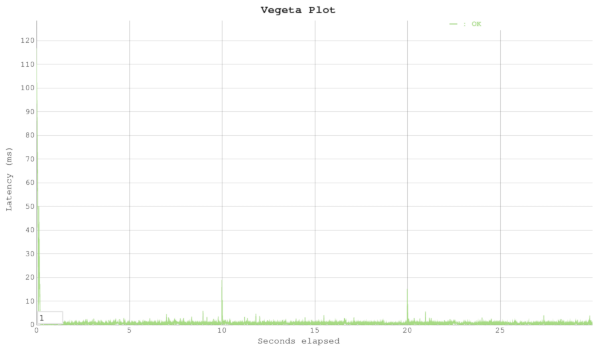

Here's the plot after allowing stale responses and background updates:

There are no more 400-ms spikes. After the first few requests warm up Nginx and PTTH, everyone gets a fast cache hit from Nginx for as long as the attack goes on.